RAG Revolution 2025: Retrieval-Augmented Generation for Enterprise-Grade Accuracy

Decoding RAG architecture and showing how Fracto’s Fractional CTOs deploy it safely.

Large language models (LLMs) thrill users but hallucinate up to 25% of the time, which is fatal for FinTech risk engines or HealthTech patient portals. Retrieval-Augmented Generation (RAG) mitigates the flaw by grounding generated answers in authoritative data retrieved at query time. The RAG market is projected to grow from US$1.24 B in 2024 to US$67.42 B by 2034, a 49.1% CAGR. (1,2)

Why Hallucinations Hurt

- FinTech: False regulatory advice risks seven-figure fines.

- Healthcare: Wrong dosage guidance jeopardises patient safety.

- LegalTech: Fabricated case law invites sanctions from courts.

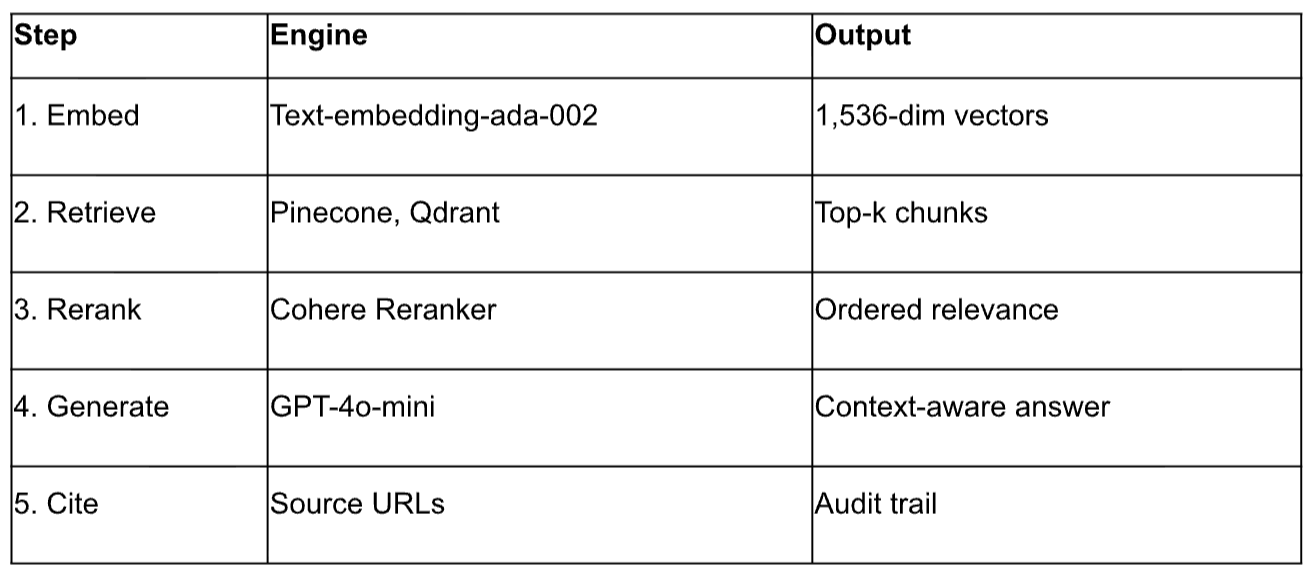

How RAG Works

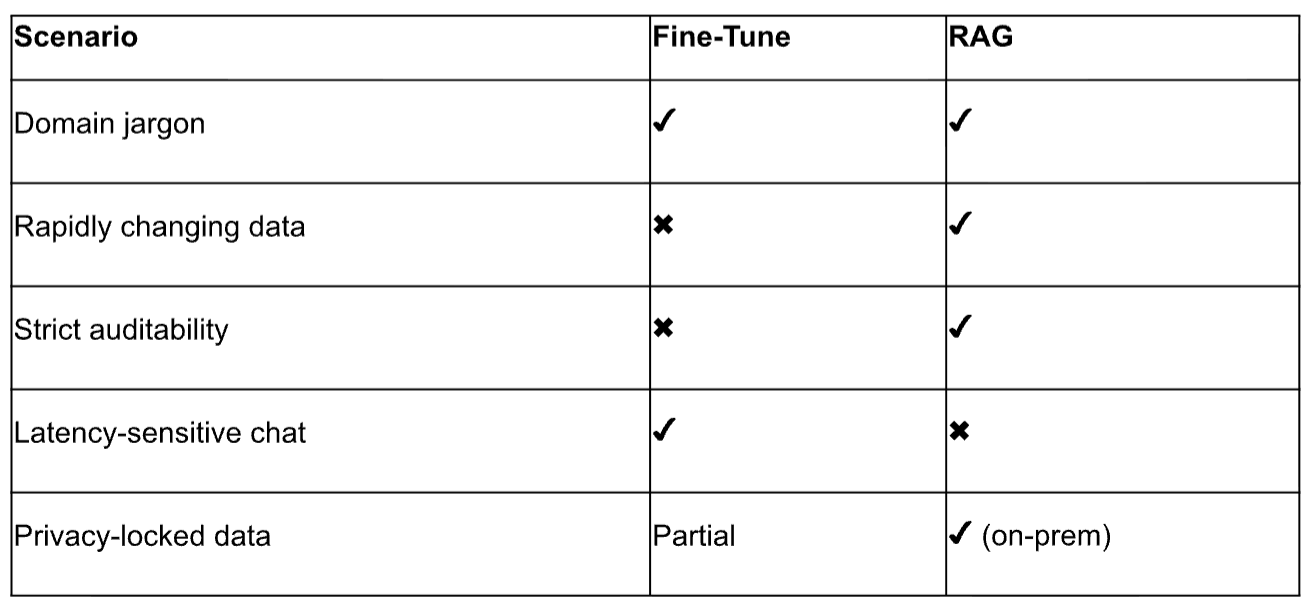
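
The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration, not Fracto's implementation: the corpus, the document ids, and the function names `retrieve` and `build_prompt` are hypothetical, and a bag-of-words cosine similarity stands in for a real embedding model and vector database. The model call itself is left out; the sketch stops at the grounded prompt that would be sent to the LLM.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        corpus.items(),
        key=lambda item: cosine(q, Counter(tokenize(item[1]))),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their ids. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical corpus for illustration only.
CORPUS = {
    "policy-001": "Refunds are issued within 14 days of a cancelled payment.",
    "policy-002": "Customer identity must be verified before account opening.",
    "policy-003": "Office hours are 9 to 5 on weekdays.",
}

question = "How many days until refunds are issued?"
top = retrieve(question, CORPUS, k=1)
prompt = build_prompt(question, top)
```

Keeping the source ids in the prompt is a design choice: it lets the answer cite where each statement came from, which makes factuality checks easier later.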

When to Fine-Tune vs Use RAG

Compliance-First RAG Checklist

- Data-classification labels on all retrieved docs.

- Access control integrated with Okta or Azure AD.

- Redaction layer for PII before vector storage.

- Continuous evaluation: factuality, toxicity, bias metrics.
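
Two of the checklist items, redaction before storage and label-aware access control, can be sketched as follows. Everything here is an assumption made for illustration: the regular expressions catch only simple email and phone patterns, the three label names are hypothetical, and the `clearance` string stands in for whatever group or role an identity provider such as Okta or Azure AD would supply in a real deployment.

```python
import re
from dataclasses import dataclass

# Deliberately simple patterns; a production redaction layer would need far more coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

# Hypothetical classification scheme, ordered from least to most sensitive.
LEVELS = {"public": 0, "internal": 1, "restricted": 2}

@dataclass(frozen=True)
class Chunk:
    text: str
    label: str

def redact(text: str) -> str:
    """Mask emails and phone numbers before the text is embedded or stored."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

def ingest(raw_text: str, label: str) -> Chunk:
    """Redact a document chunk and attach its data-classification label."""
    if label not in LEVELS:
        raise ValueError(f"unknown classification label: {label}")
    return Chunk(text=redact(raw_text), label=label)

def visible_to(chunks: list[Chunk], clearance: str) -> list[Chunk]:
    """Keep only the chunks at or below the caller's clearance level."""
    limit = LEVELS[clearance]
    return [c for c in chunks if LEVELS[c.label] <= limit]

store = [
    ingest("Contact jane.doe@example.com or +353 90 123 4567", "internal"),
    ingest("Our support desk is open on weekdays.", "public"),
    ingest("Board minutes: acquisition under review.", "restricted"),
]
public_view = visible_to(store, "public")
```

Filtering on the label at retrieval time, before anything reaches the prompt, means a restricted passage cannot leak into an answer for a user who lacks clearance.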

Performance Benchmarks

- 40–70% hallucination reduction in Fracto pilots (1,2).

- 35% faster customer resolution versus non-RAG chatbots.

- 30% higher conversion on FinTech onboarding flows.

Future Roadmap

- Agentic RAG: Autonomous agents that plan multi-step research tasks (3,4).

- Multimodal RAG: Combining text, images, and tabular data for richer answers.

- Edge-RAG: On-device retrieval for privacy-critical mobile apps.

90-Day RAG Deployment Plan

- Weeks 1–4: Corpus inventory & data-quality scoring.

- Weeks 5–8: Vector DB provisioning & embedding pipeline.

- Weeks 9–12: Prompt-template design, feedback tooling, and A/B pilot.
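
An embedding pipeline usually begins by splitting documents into chunks. The sketch below shows one common approach, fixed-size word windows with overlap. The plan above does not specify a chunking strategy, so the function name `chunk_words` and the default sizes are assumptions chosen for illustration.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once the current window already reaches the end of the text.
        if start + size >= len(words):
            break
    return chunks

sample = " ".join(str(i) for i in range(10))
windows = chunk_words(sample, size=4, overlap=1)
```

The overlap keeps a sentence that straddles a boundary retrievable from either neighbouring chunk, at the cost of some duplicated storage.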

Claim Fracto’s no-cost RAG Feasibility Workshop and discover how to cut hallucinations in half.

Email us at info@fracto.ie or submit a contact form.

Fracto by W3Blendr Ltd.

Athlone, Co. Westmeath,

Republic of Ireland